Habitat Rearrangement Challenge 2022

Overview

For NeurIPS 2022, we are hosting the Object Rearrangement challenge in the Habitat simulator [1]. Object rearrangement focuses on mobile manipulation, low-level control, and task planning.

For details on how to participate, submit, and train agents, refer to the github.com/facebookresearch/habitat-challenge/tree/rearrangement-challenge-2022 repository.

Task: Object Rearrangement

In the object rearrangement task, a Fetch robot is randomly spawned in an unseen environment and asked to rearrange a list of objects from initial to desired positions – picking/placing objects from receptacles (counter, sink, sofa, table) and opening/closing containers (drawers, fridges) as necessary. The task is communicated to the robot using the GeometricGoal specification, which provides the initial 3D center-of-mass position of each target object to be rearranged along with the desired 3D center-of-mass position for that object. An episode is successful if all target objects are within 15cm of their desired positions (without considering orientation).
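The success criterion can be written as a short check. This is a minimal sketch, not the evaluator's code: the helpers `object_at_goal` and `episode_success` are hypothetical, positions are assumed to be 3D center-of-mass coordinates in meters, and a distance of exactly 15 cm is assumed to count as success.

```python
import math
from typing import Sequence

# Hypothetical alias: a 3D center-of-mass position (x, y, z) in meters.
Vec3 = Sequence[float]

SUCCESS_RADIUS_M = 0.15  # 15 cm; orientation is ignored


def object_at_goal(current: Vec3, goal: Vec3, radius: float = SUCCESS_RADIUS_M) -> bool:
    """True if the object's center of mass lies within `radius` of its goal."""
    return math.dist(current, goal) <= radius


def episode_success(currents: Sequence[Vec3], goals: Sequence[Vec3]) -> bool:
    """The episode succeeds only if every target object is at its goal."""
    if len(currents) != len(goals):
        raise ValueError("need exactly one goal per target object")
    return all(object_at_goal(c, g) for c, g in zip(currents, goals))
```

Requiring `all` objects to pass mirrors the "all target objects" wording; a single misplaced object fails the whole episode.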

The Fetch robot is equipped with an egocentric 256x256 90-degree FoV RGBD camera on the robot head. The agent also has access to idealized base-egomotion giving the relative displacement and angle of the base since the start of the episode. Additionally, the robot has proprioceptive joint sensing providing access to the current robot joint angles.
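The camera parameters above determine pinhole intrinsics: for a 256-pixel-wide image with a 90-degree horizontal FoV, the focal length is (256 / 2) / tan(45°) = 128 pixels. The sketch below uses that to back-project a depth pixel into a 3D point in the camera frame. It assumes an ideal pinhole camera with square pixels, a principal point at the image center, depth already converted to meters as z-depth, and the usual computer-vision axes (x right, y down, z forward), which may differ from the simulator's own conventions. `pinhole_intrinsics` and `backproject` are hypothetical helpers.

```python
import math
from typing import Tuple

Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def pinhole_intrinsics(width: int, height: int, hfov_deg: float) -> Intrinsics:
    """Intrinsics of an ideal pinhole camera with square pixels (assumed)."""
    fx = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    fy = fx  # square pixels assumed
    cx, cy = width / 2.0, height / 2.0  # principal point at image center (assumed)
    return fx, fy, cx, cy


def backproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Lift pixel (u, v) with metric z-depth to a camera-frame point (x right, y down, z forward)."""
    fx, fy, cx, cy = k
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


k = pinhole_intrinsics(256, 256, 90.0)
# A pixel at the right image edge, 2 m away, sits about 2 m to the right (45-degree half-FoV).
print(backproject(256, 128, 2.0, k))
```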

There are two tracks in the Habitat Rearrangement Challenge.

- Rearrange-Easy: The agent must rearrange one object. Furthermore, all containers (such as the fridge, cabinets, and drawers) start open, so the agent never needs to open containers to access objects or goals. The task plan in Rearrange-Easy is fixed: navigate to the object, pick it up, navigate to the goal, and place it at the goal. The maximum episode length is 1500 time steps.

- Rearrange: The agent must rearrange one object, but containers may start closed or open. Since the object may start inside a closed receptacle, the agent may need to perform intermediate actions to access it. For example, an apple may start in a closed fridge and have a goal position on the table; to rearrange the apple, the agent must first open the fridge before picking up the apple. The agent is not told whether these intermediate open actions are needed; it must infer this from the egocentric observations and the goal specification. The maximum episode length is 5000 time steps.
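The difference between the two tracks can be illustrated with a hypothetical high-level skill planner. In Rearrange-Easy the plan is always the same four skills; in Rearrange an extra open skill may be needed first. The skill names and the `plan_skills` function are illustrative assumptions, and this sketch takes the container state as an input, whereas a real agent must infer it from its observations.

```python
from typing import List, Optional


def plan_skills(closed_container: Optional[str] = None) -> List[str]:
    """Return a hypothetical skill sequence for rearranging one object.

    closed_container: name of the closed container holding the object
    (e.g. "fridge" or "drawer"), or None if the object is directly
    reachable, which is always the case in Rearrange-Easy.
    """
    plan = ["nav_to_object"]
    if closed_container is not None:
        # Rearrange track only: open the container before picking.
        plan.append(f"open_{closed_container}")
    plan += ["pick", "nav_to_goal", "place"]
    return plan


print(plan_skills())          # Rearrange-Easy: fixed four-skill plan
print(plan_skills("fridge"))  # the apple-in-the-fridge example
```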

Dataset

Both tracks use the following dataset splits. All datasets use scenes from the ReplicaCAD dataset [1]. Objects are from YCB [2], and the same object types are present in all datasets.

- train: 50k episodes specifying rearrangement problems across 63 scenes. Robot start positions are procedurally generated in these episodes, giving an effectively unlimited number of training episodes.

- minival: 20 episodes for quick evaluation to ensure consistency between your local setup, Docker evaluation, and EvalAI.

- val: 1k episodes in 21 different scenes from ReplicaCAD.

- test-standard: 1k episodes from 21 unseen scenes from ReplicaCAD for the public EvalAI leaderboard. There is no public access to this dataset.

- test-challenge: 1k episodes on the same 21 scenes as test-standard. This dataset will be used to announce the competition winners.

Agent

We first describe the Fetch robot’s sensors and the information provided from these sensors as inputs to your policy in the challenge. Next, we describe the action space of the robot and the values your policy should output.

Agent Observations: The Fetch robot is equipped with an RGBD camera on the head, joint proprioceptive sensing, and egomotion. The task is specified as the starting position of the object and the goal position relative to the robot start in the scene. From these sensors, the task provides the following inputs:

- 256x256 90-degree FoV RGBD camera on the Fetch Robot head.

- The starting position of the object to rearrange relative to the robot’s end-effector in Cartesian coordinates.

- The goal position relative to the robot’s end-effector in Cartesian coordinates.

- The starting position of the object to rearrange relative to the robot’s base in polar coordinates.

- The goal position relative to the robot’s base in polar coordinates.

- The joint angles, in radians, of the seven joints on the Fetch arm.

- A binary indicator of whether the robot is holding an object (1 when holding an object, 0 otherwise). This indicates whether the robot is holding any object, not just the target object.
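The base-relative polar inputs can be related to world coordinates with a 2D frame change. This sketch assumes a ground plane with x and y axes and a robot base pose given as a position plus a yaw angle; the conventions used here (angle measured counterclockwise from the robot's heading, so positive means to the robot's left) are assumptions and may not match the simulator's. `world_to_base_polar` is a hypothetical helper.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def world_to_base_polar(base_xy: Vec2, base_yaw: float, target_xy: Vec2) -> Tuple[float, float]:
    """Return (distance, angle) of a world-frame target as seen from the robot base."""
    # Offset from the base to the target, still in world axes.
    dx_w = target_xy[0] - base_xy[0]
    dy_w = target_xy[1] - base_xy[1]
    # Rotate the offset by -yaw so x points along the robot's heading.
    c, s = math.cos(base_yaw), math.sin(base_yaw)
    dx = c * dx_w + s * dy_w
    dy = -s * dx_w + c * dy_w
    return math.hypot(dx, dy), math.atan2(dy, dx)


# Robot at the origin facing +y; a target at (1, 0) is 1 m away on its right.
print(world_to_base_polar((0.0, 0.0), math.pi / 2, (1.0, 0.0)))
```

The Cartesian end-effector inputs follow the same idea with a full 3D transform in place of the yaw rotation.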

Outputs: The robot can control its base and arm to interact with the world. The robot grasps objects through a suction gripper. If the tip of the suction gripper is in contact with an object and the suction is engaged, the object will stick to the end of the gripper. The suction can grasp any object in the scene and can hold onto the handles of receptacles to open or close them.

- The relative displacement of the joint position targets, in radians, for the arm's PD controller. The robot controls all 7 DoF of the arm.

- An indicator of whether the robot should engage the suction on the arm. A value greater than or equal to 0 engages the suction and attempts to grasp an object; a value less than 0 disengages the suction.
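The sketch below shows one way a policy's output could be packed and interpreted. The `[7 joint deltas, suction]` layout, the helper names, and the PD gains are assumptions for illustration; only the suction threshold (engage at values >= 0) and the idea of adding relative displacements to the joint position targets come from the description above.

```python
from typing import List, Sequence

NUM_ARM_JOINTS = 7


def make_arm_action(joint_deltas: Sequence[float], suction: float) -> List[float]:
    """Pack 7 joint-target deltas (radians) and one suction value (assumed layout)."""
    if len(joint_deltas) != NUM_ARM_JOINTS:
        raise ValueError(f"expected {NUM_ARM_JOINTS} joint deltas, got {len(joint_deltas)}")
    return [float(d) for d in joint_deltas] + [float(suction)]


def suction_engaged(suction: float) -> bool:
    # >= 0 engages suction and attempts a grasp; < 0 disengages it.
    return suction >= 0.0


def update_targets(q_target: Sequence[float], action: Sequence[float]) -> List[float]:
    """Apply the relative displacements: new target = old target + delta."""
    return [t + d for t, d in zip(q_target, action[:NUM_ARM_JOINTS])]


def pd_torque(q, qdot, q_target, kp: float = 300.0, kd: float = 30.0) -> List[float]:
    """Generic PD law pulling joints toward their targets (gains are made up)."""
    return [kp * (t - x) - kd * v for x, v, t in zip(q, qdot, q_target)]
```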

Evaluation

The primary evaluation criterion is Success. We also include additional metrics to diagnose agent efficiency and partial progress.

- Success: Whether the target object is placed within 15cm of its goal position. This is the primary metric used to judge submissions.

- Rearrangement Progress: The relative change, from the start to the end of the episode, in the Euclidean distance between the object and its goal position.

- Time Taken: The amount of time (in seconds) needed to solve the episode.
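Rearrangement Progress can be read as the fraction of the initial object-to-goal distance that the agent eliminated. The sketch below implements that reading, (d_start - d_end) / d_start; this exact formula and the handling of an object that already starts at its goal are assumptions rather than the official metric code.

```python
import math
from typing import Sequence


def rearrangement_progress(obj_start: Sequence[float], obj_end: Sequence[float],
                           goal: Sequence[float]) -> float:
    """Relative reduction in object-to-goal distance over an episode.

    1.0: the object ends exactly at the goal; 0.0: no net change;
    negative: the object ended farther from the goal than it started.
    """
    d_start = math.dist(obj_start, goal)
    d_end = math.dist(obj_end, goal)
    if d_start == 0.0:
        return 0.0  # assumption: define progress as 0 if the object starts at its goal
    return (d_start - d_end) / d_start
```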

The episode terminates with failure if the agent accumulates more than 100 kN of force over the episode or exceeds 10 kN of instantaneous force.
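The force-based termination rule can be expressed as a small monitor that is updated once per step. This sketch assumes the simulator reports a nonnegative scalar contact-force magnitude in newtons for each step and that the cumulative limit sums these per-step values; `ForceMonitor` is a hypothetical class, not part of the challenge code.

```python
from dataclasses import dataclass

CUMULATIVE_LIMIT_N = 100_000.0  # 100 kN accumulated over the episode
INSTANT_LIMIT_N = 10_000.0      # 10 kN at any single step


@dataclass
class ForceMonitor:
    accumulated: float = 0.0
    failed: bool = False

    def update(self, step_force_n: float) -> bool:
        """Record one step's force magnitude; return True once the episode has failed."""
        self.accumulated += step_force_n
        if step_force_n > INSTANT_LIMIT_N or self.accumulated > CUMULATIVE_LIMIT_N:
            self.failed = True
        return self.failed
```

Once `failed` is set it stays set, matching the rule that the episode ends at the first violation.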

Participation Guidelines

Participate in the contest by registering on the EvalAI challenge page [Coming Soon!] and creating a team. Participants will upload Docker containers with their agents, which are evaluated on an AWS GPU-enabled instance. Before pushing submissions for remote evaluation, participants should test the submission Docker container locally to make sure it works. Instructions for training, local evaluation, and online submission are provided in the challenge repository.

Valid challenge phases are habitat-rearrangement-{minival, test-standard, test-challenge}.

The challenge consists of the following phases:

- Minival phase: The purpose of this phase is sanity checking, to confirm that our remote evaluation reports the same result as the one you see locally. Each team is allowed a maximum of 100 submissions per day for this phase, but we will block and disqualify teams that spam our servers.

- Test Standard phase: The purpose of this phase/split is to serve as the public leaderboard establishing state-of-the-art; this is what should be used to report results in papers. Each team is allowed a maximum of 10 submissions per day for this phase, but again, please use them judiciously. Don’t overfit to the test set.

- Test Challenge phase: This split will be used to decide challenge winners. Each team is allowed a total of 5 submissions until the end of the challenge submission phase. The highest performing of these five will be automatically chosen. Results on this split will not be made public until the announcement of the final results at the NeurIPS 2022 competition.

Note: Your agent will be evaluated on 1000 episodes and will have a total of 48 hours to finish. Your submissions will be evaluated on an AWS EC2 p2.xlarge instance, which has a Tesla K80 GPU (12 GB memory), 4 CPU cores, and 61 GB RAM. If you need more time or resources to evaluate your submission, please get in touch.
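The evaluation budget translates into a rough per-step time limit: 48 hours over 1000 episodes is 172.8 seconds per episode. Dividing by the maximum episode length gives a worst-case budget per step, shared between the simulator and your policy. This sketch assumes every episode runs to its maximum length; episodes that end early leave more time for the rest.

```python
TOTAL_BUDGET_S = 48 * 60 * 60  # 48 hours of wall-clock time
NUM_EPISODES = 1000
MAX_EPISODE_STEPS = {"rearrange-easy": 1500, "rearrange": 5000}


def per_episode_budget_s() -> float:
    """Average wall-clock seconds available per episode."""
    return TOTAL_BUDGET_S / NUM_EPISODES


def per_step_budget_ms(max_steps: int) -> float:
    """Worst-case milliseconds per step if every episode reaches max_steps."""
    return TOTAL_BUDGET_S * 1000 / (NUM_EPISODES * max_steps)


for track, steps in MAX_EPISODE_STEPS.items():
    print(f"{track}: {per_step_budget_ms(steps):.2f} ms per step")
# rearrange-easy: 115.20 ms per step
# rearrange: 34.56 ms per step
```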

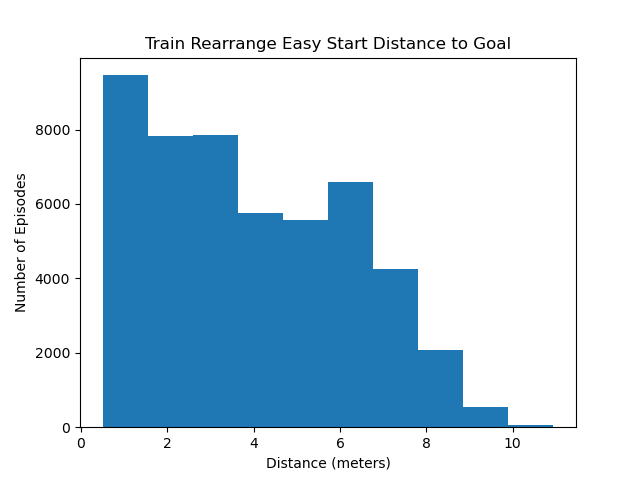

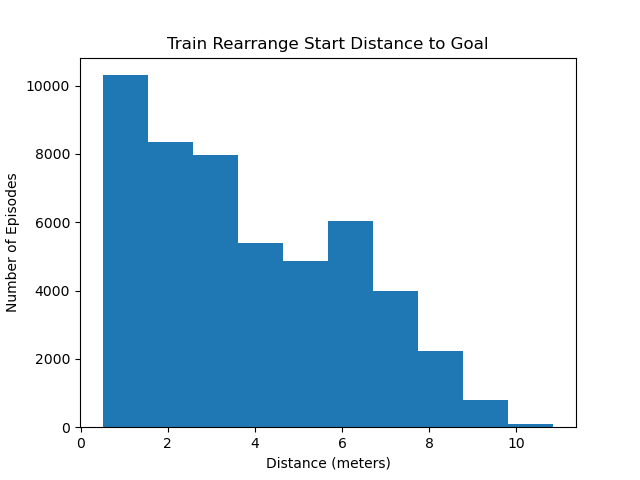

Dataset Analysis

The plots below visualize the distance between the starting object position and the goal position for each of the datasets in terms of meters.
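The start-to-goal distances shown in these plots can be recomputed from episode data along the following lines. The `(start_xyz, goal_xyz)` episode format and the helper names are hypothetical; the actual episode files may store positions differently.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List, Sequence, Tuple

Episode = Tuple[Sequence[float], Sequence[float]]  # hypothetical (start_xyz, goal_xyz)


def start_goal_distances(episodes: Iterable[Episode]) -> List[float]:
    """Euclidean start-to-goal distance (meters) for each episode."""
    return [math.dist(start, goal) for start, goal in episodes]


def histogram(values: Iterable[float], bin_width_m: float = 1.0) -> Dict[float, int]:
    """Count values per bin [k * w, (k + 1) * w); keys are bin start edges."""
    counts = Counter(math.floor(v / bin_width_m) for v in values)
    return {k * bin_width_m: counts[k] for k in sorted(counts)}
```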

Citing Habitat Rearrangement Challenge 2022

@misc{habitatrearrangechallenge2022,
  title = {Habitat Rearrangement Challenge 2022},
  author = {Andrew Szot and Karmesh Yadav and Alex Clegg and Vincent-Pierre Berges and Aaron Gokaslan and Angel Chang and Manolis Savva and Zsolt Kira and Dhruv Batra},
  howpublished = {\url{https://aihabitat.org/challenge/rearrange_2022}},
  year = {2022}
}

Acknowledgments

The Habitat challenge would not have been possible without the infrastructure and support of the EvalAI team.

References

- [1] Habitat 2.0: Training Home Assistants to Rearrange their Habitat. Andrew Szot, Alex Clegg, Eric Undersander, Erik Wijmans, Yili Zhao, John Turner, Noah Maestre, Mustafa Mukadam, Devendra Chaplot, Oleksandr Maksymets, Aaron Gokaslan, Vladimir Vondrus, Sameer Dharur, Franziska Meier, Wojciech Galuba, Angel Chang, Zsolt Kira, Vladlen Koltun, Jitendra Malik, Manolis Savva, Dhruv Batra. NeurIPS, 2021.

- [2] The YCB Object and Model Set: Towards Common Benchmarks for Manipulation Research. B. Calli, A. Singh, A. Walsman, S. Srinivasa, P. Abbeel, A. M. Dollar. International Conference on Advanced Robotics (ICAR), 2015.

Dates

| Milestone | Date |
|---|---|
| Challenge starts | Aug 8, 2022 |
| Test Phase Submissions Open | Aug 22, 2022 |
| Challenge submission deadline | Oct 20, 2022 |

Participation

For details on how to participate, submit, and train agents, refer to the github.com/facebookresearch/habitat-challenge/tree/rearrangement-challenge-2022 repository.

Please note that the latest submission to the test-challenge split will be used for final evaluation.

For updates on the competition, join the rearrange-challenge-neurips2022 Habitat Slack channel (available under Contact).
