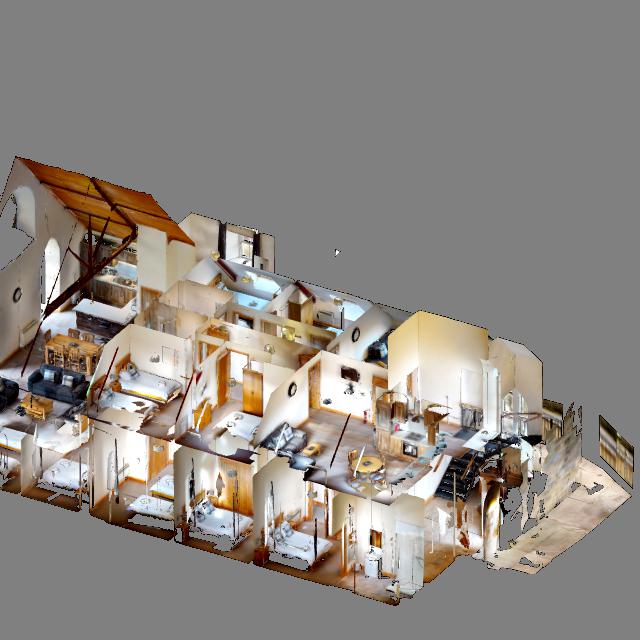

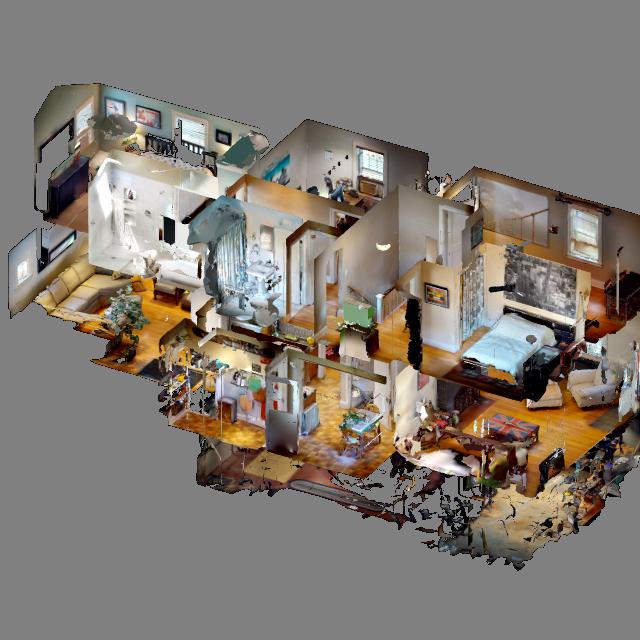

Habitat Matterport Dataset

The Habitat-Matterport 3D Research Dataset (HM3D) is the largest-ever dataset of 3D indoor spaces. It consists of 1,000 high-resolution 3D scans (or digital twins) of building-scale residential, commercial, and civic spaces generated from real-world environments.

HM3D is free and available for academic, non-commercial research. Researchers can use it with FAIR’s Habitat simulator to train embodied agents, such as home robots and AI assistants, at scale.

Citing HM3D

If you use the HM3D dataset in your research, please cite the HM3D paper:

@inproceedings{ramakrishnan2021hm3d,
  title={Habitat-Matterport 3D Dataset ({HM}3D): 1000 Large-scale 3D Environments for Embodied {AI}},
  author={Santhosh Kumar Ramakrishnan and Aaron Gokaslan and Erik Wijmans and Oleksandr Maksymets and Alexander Clegg and John M Turner and Eric Undersander and Wojciech Galuba and Andrew Westbury and Angel X Chang and Manolis Savva and Yili Zhao and Dhruv Batra},
  booktitle={Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track},
  year={2021},
  url={https://arxiv.org/abs/2109.08238}
}